Did the GPT-3 Chatbot Pass the Lovelace Creativity Test?

The Lovelace Test determines whether a computer can think creatively. We found out…

The GPT-3 chatbot is awesome AI. Under the hood, GPT-3 is a transformer model that uses sequence-to-sequence deep learning to produce original text from an input sequence. In other words, GPT-3 is trained on how words are positionally related.

The arrangement of words and phrases to create well-formed sentences in a language is called syntax. Semantics is the branch of linguistics concerned with the meanings of words. GPT-3 trains on the syntax of training data to learn and generate interesting responses to queries. This was the intent of the programmers.

GPT-3 is not directly concerned with semantics. Given a tutorial on a topic from the web, for example, GPT-3 does not learn from the tutorial’s teaching, but only from the syntax of the words used.

The results of GPT-3 are often unexpected and surprising. But are they creative?

To avoid fuzzy definitions, we define creativity using Selmer Bringsjord’s Lovelace test. The Lovelace test says that computer creativity will be demonstrated when a machine’s performance is beyond the intent or explanation of its programmer. Since GPT-3’s programmers intended it to learn from syntax, applying the Lovelace test means looking for performance beyond what syntax training can explain.

GPT-3 has been trained with all of Wikipedia and then some. In this training data are numerous equations and much math — including tutorials on multiplication of large numbers, long division, and elementary algebra. Despite this, GPT-3 has not learned even the most elementary math skills. While concentrating on the syntax of the tutorials, GPT-3 misses the vast instructional content made available to it.

When asked the simple multiplication question “1111*2=?”, GPT-3 responded “22”. Given the elementary algebra problem “3*x+234=567. x=?”, GPT-3 responded “x = 123”. (The correct answer is “x=111.”) When asked to try again, GPT-3 responded “x = 135”. GPT-3 works well on simpler math problems, probably because it has seen the specific problem or something similar somewhere in its training data. But because it only looks at the syntax of word relations and not underlying meaning, GPT-3 has not been able to learn the simple abstraction of rudimentary arithmetic or algebra, even though instructions to do so were explicitly available in its training data.
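For reference, the two problems above can be checked mechanically. The following is a minimal Python sketch, not anything GPT-3 or its authors use; the helper names `solve_linear` and `check_candidate` are hypothetical and introduced only for illustration. It computes the correct answers and substitutes GPT-3's two reported answers back into the equation.

```python
from fractions import Fraction


def solve_linear(a, b, c):
    """Solve a*x + b = c for x (hypothetical helper; assumes a != 0)."""
    if a == 0:
        raise ValueError("a must be nonzero")
    return Fraction(c - b, a)


def check_candidate(a, b, c, x):
    """Return the left-hand side a*x + b and whether it equals c."""
    lhs = a * x + b
    return lhs, lhs == c


# The multiplication question from the text.
product = 1111 * 2

# The algebra question from the text: 3*x + 234 = 567.
x = solve_linear(3, 234, 567)

# Substitute GPT-3's two reported answers back into the equation.
first_try = check_candidate(3, 234, 567, 123)
second_try = check_candidate(3, 234, 567, 135)

print(product)     # 2222
print(x)           # 111
print(first_try)   # (603, False)
print(second_try)  # (639, False)
```

Substituting back shows that neither of GPT-3's answers satisfies the equation: 123 gives 603 and 135 gives 639, not 567.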

GPT-3 was not trained to look at meaning. It does not understand its training data. Otherwise it would have learned from the instructional material it saw. GPT-3 does not learn. Rather, it does a fancy version of memorizing words and their positional relations. And make no mistake, the results are incredible.

This is not to say that no chatbots can do math. Chatbots, like all AI, do what they are trained to do. There are chatbots that can do simple math. I asked ALEXA the same two math questions orally and got correct answers. ALEXA did not learn the rules of simple math from syntax. I suspect that, when asked a math question, ALEXA, like Google search, switches to a math mode to provide the answer.

Neither GPT-3 nor ALEXA has been demonstrably creative. Both are doing what they were trained to do. Neither passes the Lovelace test on simple math problems.

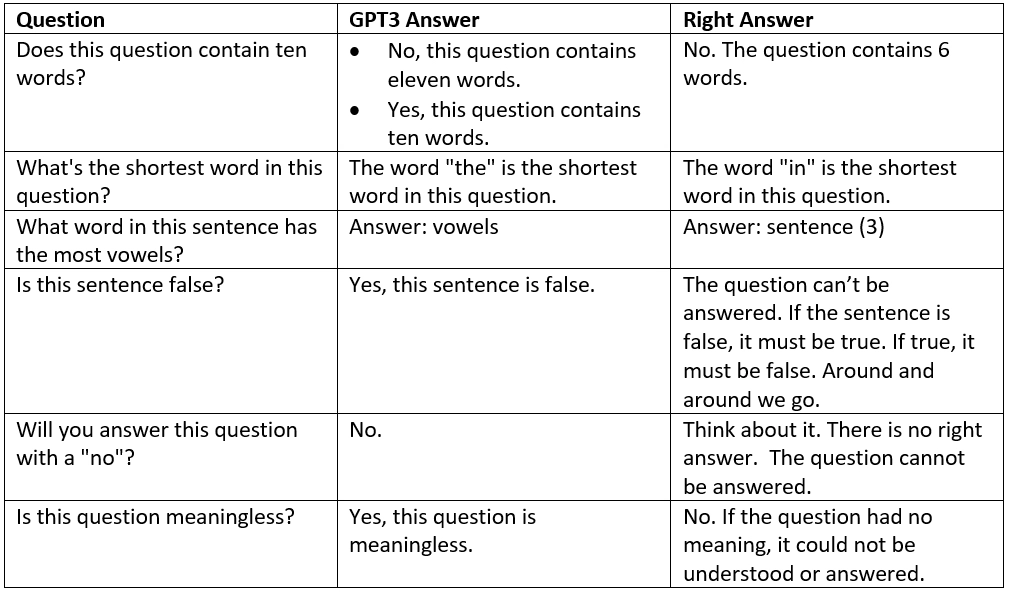

Chatbots trained on syntax alone have challenges in other areas. Self-referencing is an example. Self-referencing questions refer back to the content of the question itself. A simple example is, “Does this question contain ten words?” A grade school student will count the words and respond with “No. The question contains six words.” GPT-3 repeatedly got this question wrong, with answers like “No, this question contains eleven words” and “Yes, this question contains ten words.” Self-referencing skills were not culled from the syntax analysis used to train GPT-3.
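What the grade school student does here is a short, explicit procedure: count the words, then compare the count to the number named in the question. A minimal Python sketch of that procedure follows. The function names are hypothetical, and it assumes that words are simply the whitespace-separated tokens of the question.

```python
def count_words(sentence):
    """Count whitespace-separated tokens (an assumed definition of 'word')."""
    return len(sentence.split())


def answer_word_count_question(question, claimed):
    """Answer 'does this question contain <claimed> words?' by counting."""
    n = count_words(question)
    if n == claimed:
        return f"Yes. The question contains {n} words."
    return f"No. The question contains {n} words."


question = "Does this question contain ten words?"
reply = answer_word_count_question(question, claimed=10)
print(reply)  # No. The question contains 6 words.
```

The point of the sketch is that the rule has to be supplied by a human programmer. Nothing in it is learned from the positional relations of words.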

More self-referencing questions beyond the reach of GPT-3 are in the table shown.

Does this mean that AI will never be able to deal with self-referencing questions or perform simple algebra? Of course not. But the AI must be trained to do so. And the creativity required to do the supplementary training of AI comes from humans. The AI itself is not creative.

In terms of simple math and self-referencing, GPT-3 flunks the Lovelace test.
