Abstract

We present an inverse technique to determine particle-size distributions by training a layered perceptron neural network with optical backscattering measurements at three wavelengths. An advantage of this approach is that, even though the training may take a long time, once the neural network is trained the inverse problem of obtaining size distributions can be solved speedily and efficiently.

© 1990 Optical Society of America

Previously, methods such as smoothing and statistical and Backus–Gilbert inversion techniques were used to find profiles of particle distributions.[1]–[4] The smoothing technique requires a judicious choice of two parameters that control the smoothness of the solution. Statistical inversion techniques require prior knowledge of the statistical properties of the unknown function and the measurement errors. The Backus–Gilbert technique requires a good compromise between the spread and the variance.

In this Letter we present an alternative method based on an artificial neural network technique. The neural network technique offers a different approach in that the network retains the experience gained during training; even though the training may take a long time, once the training is done the inversion can be performed almost instantly. As an example, we use a simple inverse problem: finding the particle-size distribution from single scattering. We use this example to demonstrate that the technique has potential for more general multiple-scattering problems.

We consider the inverse problem of finding the particle-size distribution from measurements of backscattered light from an optically thin medium containing particles. The first-order scattering approximation is used. The measured quantity is the backscattered intensity β(λi) at three different wavelengths λi (i = 1, 2, 3), and it is related to the size-distribution function n(r) by a Fredholm integral equation of the first kind as

β(λi) = ∫ K(λi, m, r) n(r) dr, (1)

where m is the particle refractive index, r is the radius of the particle, and K(λi, m, r) is the backscattering cross section.[5] We assume that the particles are spherical so that the backscattering cross section can be computed by the Mie solution. The inversion problem is to find the distribution n(r) from measurements of β(λi). In real-life remote-sensing applications, a large amount of data is collected continually, and it is important to develop a fast inversion algorithm. The neural network technique presented in this Letter can perform fast inversion once the neural network is trained.

Here we utilize a layered perceptron neural network to determine particle-size distributions.[6] The size-distribution function is assumed to be a log-normal distribution function so that it is characterized by the mean radius rm and the standard deviation σ. The inverse problem is to obtain rm and σ for given β(λi). Although β(λi) is linearly related to the distribution n(r), the relationship between the input β(λi) and the outputs rm and σ is nonlinear. Earlier techniques such as the Backus–Gilbert technique can handle only linear inversion. It is also our purpose here to demonstrate that the neural network can solve a nonlinear inversion problem. The inverse problem treated by Kitamura and Qing,[6] however, is to obtain n(r) at 31 points, and therefore the relationship between input and output is linear.

We also found that the β(λi)'s can become close together for some rm and σ, resulting in a nonunique inverse solution. An algorithm is presented to find the ranges of rm and σ for which unique solutions can be obtained. Finally we show that increasing the number of iterations in training the neural network causes the inverse solutions to tend to converge to the real value.
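The forward relation between β(λi) and n(r) can be illustrated numerically by discretizing the Fredholm integral on a grid of radii. The following is a minimal sketch, not the computation used in this Letter: the function name `backscatter`, the trapezoid rule, the log-spaced radius grid, and especially `toy_kernel` are assumptions made for illustration. A real calculation would replace `toy_kernel` with the Mie backscattering cross section.

```python
import numpy as np

def backscatter(kernel, n_of_r, radii):
    """Approximate beta(lambda_i) = integral K(lambda_i, m, r) n(r) dr.

    kernel : shape (n_wavelengths, n_radii), K sampled on `radii`
    n_of_r : shape (n_radii,), size distribution sampled on `radii`
    radii  : shape (n_radii,), increasing particle radii
    """
    integrand = kernel * n_of_r          # broadcasts over wavelengths
    dr = np.diff(radii)
    # Trapezoid rule, written out explicitly
    return 0.5 * np.sum((integrand[:, 1:] + integrand[:, :-1]) * dr, axis=-1)

# 31 radii from 0.01 to 40 um; the logarithmic spacing is an assumption
radii = np.logspace(np.log10(0.01), np.log10(40.0), 31)
wavelengths = np.array([0.53, 1.06, 2.12])  # um

# Placeholder kernel for illustration only; NOT the Mie cross section
toy_kernel = np.pi * radii**2 / (1.0 + (wavelengths[:, None] / radii) ** 4)

n_flat = np.ones_like(radii)             # hypothetical flat distribution
beta = backscatter(toy_kernel, n_flat, radii)
```

Because the integral is linear in n(r), doubling `n_flat` doubles every element of `beta`; the nonlinearity discussed in the text enters only through the parameters rm and σ.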

An artificial neural network can be defined as a highly connected array of elementary processors called neurons. Here we consider the multilayer perceptron (MLP) type of artificial neural network.[7]–[11]

As shown schematically in Fig. 1, the MLP-type neural network consists of one input layer, one or more hidden layers, and one output layer. Each layer employs several neurons, and each neuron in a layer is connected to the neurons in the adjacent layer with different weights. We use three input neurons [β(λ1), β(λ2), β(λ3)] and two output neurons (rm, σ). Signals pass from the input layer, through the hidden layers, to the output layer. Except in the input layer, each neuron receives a signal that is a linearly weighted sum of all the outputs from the neurons of the preceding layer. The neuron then produces its output signal by passing the summed signal through the sigmoid function 1/[1 + exp(−x)].
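The signal flow described above can be sketched as follows. This is an illustrative forward pass only: the hidden-layer width of 8, the random weights, and the names `sigmoid` and `mlp_forward` are assumptions, since the text does not specify the number of hidden neurons.

```python
import numpy as np

def sigmoid(x):
    """Sigmoid activation 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + np.exp(-x))

def mlp_forward(x, weights):
    """Propagate an input vector through the layers.

    Each neuron past the input layer receives a linearly weighted sum of
    the previous layer's outputs and passes it through the sigmoid.
    """
    a = np.asarray(x, dtype=float)
    for W in weights:
        a = sigmoid(W @ a)
    return a

rng = np.random.default_rng(0)
layer_sizes = [3, 8, 2]   # 3 inputs, 8 hidden (assumed), 2 outputs (rm, sigma)
weights = [rng.normal(scale=0.5, size=(n_out, n_in))
           for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

# Hypothetical normalized inputs standing in for beta at the three wavelengths
output = mlp_forward([0.2, 0.5, 0.9], weights)
```

Since the output neurons are also sigmoidal, both outputs lie strictly between 0 and 1, which is one reason the training data are normalized to that range.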

The backpropagation learning algorithm is employed for training the neural network. This algorithm uses gradient descent to obtain the best estimates of the interconnection weights, and the weights are adjusted after every iteration. The iteration process stops when the gradient descent search reaches a minimum of the difference between the desired and actual outputs.[10],[11]
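A minimal sketch of backpropagation with gradient descent for a network with one hidden layer is given below. It is not the training code used in this Letter: the hidden width, learning rate, squared-error loss, fixed iteration count (in place of a convergence test), and the synthetic data are all assumptions chosen to keep the example short.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_mlp(X, Y, n_hidden=8, learning_rate=0.5, n_iterations=2000, seed=0):
    """Train a one-hidden-layer sigmoid network by batch gradient descent."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(scale=0.5, size=(X.shape[1], n_hidden))
    W2 = rng.normal(scale=0.5, size=(n_hidden, Y.shape[1]))
    n = X.shape[0]
    losses = []
    for _ in range(n_iterations):
        # Forward pass
        H = sigmoid(X @ W1)
        P = sigmoid(H @ W2)
        err = P - Y
        losses.append(0.5 * np.mean(np.sum(err**2, axis=1)))
        # Backward pass: propagate the error through the sigmoid derivatives
        dP = err * P * (1.0 - P)
        dH = (dP @ W2.T) * H * (1.0 - H)
        # Gradient descent update of the interconnection weights
        W2 -= learning_rate * (H.T @ dP) / n
        W1 -= learning_rate * (X.T @ dH) / n
    return (W1, W2), losses

# Synthetic stand-in data: 3 normalized inputs, 2 normalized outputs
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(200, 3))
Y = np.column_stack([0.1 + 0.3 * X.mean(axis=1), 0.7 + 0.2 * X[:, 0] * X[:, 2]])
params, losses = train_mlp(X, Y)
```

Plotting `losses` against the iteration index gives a simple picture of how the difference between desired and actual outputs shrinks as training proceeds.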

We consider the backscattering of light from a volume distribution of spherical particles with 31 radii ranging from 0.01 to 40 μm. We assume that the size-distribution function n(r) is governed by the log-normal function so that it is characterized by two quantities: the mean radius rm and the standard deviation σ. Therefore it is given by
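For reference, a commonly used log-normal form consistent with the definitions above is written out here. The normalization (a unit-area density per unit radius) is an assumption and may differ from the form used in this Letter; the exponent does not depend on the base of the logarithm, because only the ratio of logarithms appears.

```latex
n(r) = \frac{1}{\sqrt{2\pi}\, r \ln\sigma}
       \exp\!\left[ -\frac{(\ln r - \ln r_m)^2}{2\,(\ln\sigma)^2} \right],
\qquad
\frac{\ln r - \ln r_m}{\ln\sigma} = \frac{\log r - \log r_m}{\log\sigma}.
```

In this parameterization σ > 1 acts as a geometric standard deviation, so most particles have radii between rm/σ and rmσ.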

First we conduct a study of the forward problem of finding β(λi) for various rm and σ. Since the radius of the particles varies from 0.01 to 40 μm, i.e., −2 ≤ log(r) ≤ 1.66, the ranges for rm and σ are chosen so that −1 ≤ log(rm) ≤ 0.44 and 0.03 ≤ log(σ) ≤ 1. Thus the actual sizes of the particles, ranging from rm/σ to rmσ, will be within the range for r. The inverse problem of obtaining rm and σ for given β(λi) is generally nonunique if rm and σ are allowed to take any values in the above ranges. In what follows, we restrict the ranges for rm and σ such that a unique solution can be obtained. The algorithm for finding such ranges is also discussed.
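The statement that rm/σ and rmσ stay within the range of r can be checked directly in logarithmic form, since log(rm/σ) = log(rm) − log(σ) and log(rmσ) = log(rm) + log(σ). The sketch below uses the bounds quoted above; the grid of 11 points per axis and the variable names are illustrative assumptions.

```python
import numpy as np

LOG_R_MIN, LOG_R_MAX = -2.0, 1.66        # range of log(r) quoted in the text

log_rm = np.linspace(-1.0, 0.44, 11)     # grid resolution is an assumption
log_sigma = np.linspace(0.03, 1.0, 11)

# Lower and upper size limits for every (rm, sigma) pair, in log form
lower = log_rm[:, None] - log_sigma[None, :]   # log(rm / sigma)
upper = log_rm[:, None] + log_sigma[None, :]   # log(rm * sigma)

tol = 1e-12                                    # guard against rounding
within_range = bool(np.all(lower >= LOG_R_MIN - tol)
                    and np.all(upper <= LOG_R_MAX + tol))
```

The tightest case is the smallest rm combined with the largest σ, where log(rm) − log(σ) equals the lower limit of −2.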

Both log(rm) and log(σ) are divided into 10 intervals for generating the training and testing data. We chose the refractive index of the particle to be m = 1.53 − j0.008 and the wavelengths to be λ1 = 0.53 μm, λ2 = 1.06 μm, and λ3 = 2.12 μm. The study of β(λi) reveals that for some values of log(rm) and log(σ) the inputs are close to one another, resulting in a nonunique solution for rm and σ with such β(λi). For a unique solution of rm and σ for a given β(λi), the change in β(λi) for a given change of rm and σ must be sufficiently large. Therefore we define the distance D, a measure of the separation of β(λi), as

Here we have divided log(rm) and log(σ) into a number of intervals, with the mean radii indexed as rml (l = 1, 2, …, N) and the standard deviations as σj (j = 1, 2, …, M).

In order to ensure that the β(λi)'s are sufficiently separated, we require that D exceed a minimum distance Dm. To find Dm, we first notice that there is a large difference in magnitude between β(λi, σj, rml) and β(λi, σk, rml) for k > j. For instance, we have β(λi, σ1, rm1) ∼ 10⁻¹⁵ and β(λi, σM, rm1) ∼ 10⁻⁶. Thus Dm cannot be fixed for all σj but should vary according to σj. In addition, for the same σj, the value of β(λi, σj, rml) increases from l = 1 to l = N, so the lowest value occurs when l = 1. Hence the minimum distance Dm is chosen to be proportional to the β(λi) obtained from the first mean radius rm1. Specifically,

where D1 is a constant. Thus Dm is a fixed quantity when D1 and σj are fixed. Therefore we can determine the allowable range of log(rm) for that particular log(σj), i.e., the lower and upper bounds of log(rm), by enforcing the requirement that D ≥ Dm. Similarly, for each log(σj), j = 1, 2, …, we compute the corresponding allowable range of log(rm). From the diagram of all the allowable ranges for log(rm), we can estimate the desired region for log(rm) and log(σ).

The constant D1 in Eq. (4) controls the size of the allowable region for log(rm) and log(σ). A large value of D1 will generally create a small allowable region, but the values of β(λi) are then reasonably well separated, and therefore unique sets of β(λi) can be obtained. On the other hand, a small value of D1 will create a large allowable region, but the sets of β(λi) are then close to one another. Unique sets of β(λi) are thus difficult to obtain, resulting in a large percentage error in the recovered size distribution. Values of D1 ranging from 0.1 to 50 were tested to find a suitable choice. It was found that a value of 10 for D1 is a good compromise between the percentage error and the size of the allowable region for log(rm) and log(σ). With this value, the allowable region is found to be −0.328 ≤ log(rm) ≤ 0.44 and 0.03 ≤ log(σ) ≤ 0.5, as shown in Fig. 2.
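The range-selection procedure can be sketched in code under stated assumptions. Here D is assumed to be the Euclidean distance between the β vectors at neighbouring mean radii for a fixed σj, and Dm is assumed to be D1 times the norm of the β vector at the first mean radius; these definitions, the function name `allowable_log_rm`, and the synthetic data are illustrative choices and may differ from the definitions of D and Dm used in this Letter.

```python
import numpy as np

def allowable_log_rm(beta, log_rm, d1):
    """Return (lower, upper) bounds of log(rm) for each sigma_j, or None.

    beta   : shape (M, N, n_wavelengths), beta[j, l, i] = beta(lambda_i, sigma_j, rm_l)
    log_rm : shape (N,), increasing grid of log(rm)
    d1     : proportionality constant D1
    """
    # Assumed D: distance between beta vectors at neighbouring mean radii
    D = np.linalg.norm(np.diff(beta, axis=1), axis=-1)       # (M, N-1)
    # Assumed Dm: D1 times the size of beta at the first mean radius
    Dm = d1 * np.linalg.norm(beta[:, 0, :], axis=-1)         # (M,)
    ok = D >= Dm[:, None]
    ranges = []
    for j in range(beta.shape[0]):
        idx = np.flatnonzero(ok[j])
        if idx.size == 0:
            ranges.append(None)          # no allowable range for this sigma_j
        else:
            ranges.append((float(log_rm[idx[0]]), float(log_rm[idx[-1] + 1])))
    return ranges

# Synthetic stand-in for the forward-problem results (not real Mie data)
log_rm = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
profiles = np.array([[1.0, 1.5, 2.0, 20.0, 200.0],    # well separated at large rm
                     [1.0, 1.1, 1.2, 1.3, 1.4]])      # never well separated
beta = np.repeat(profiles[:, :, None], 3, axis=2)     # same value at 3 wavelengths
ranges = allowable_log_rm(beta, log_rm, d1=1.0)
```

Raising `d1` in this sketch shrinks or removes the returned ranges, which mirrors the trade-off described above between separation of the β(λi) and the size of the allowable region.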

Based on the allowable region discussed above, a group of 480 sets of data was generated from Eq. (1). In order to maximize the computing accuracy of the neural network, we first normalize all the data to the range from zero to unity. We use 462 data sets to train the neural network. The remaining 18 sets are used to test the system. Finally, as shown in Fig. 3, the results are converted back to the original values.
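The normalization, the 462/18 split, and the conversion back to original values can be sketched as follows. Min-max scaling per column and a random choice of the 18 test sets are assumptions, as the text does not say how either was done; the uniformly sampled `targets` are placeholders for the actual (log(rm), log(σ)) pairs.

```python
import numpy as np

def minmax_fit(data):
    """Column-wise minimum and maximum used for scaling."""
    return data.min(axis=0), data.max(axis=0)

def minmax_apply(data, lo, hi):
    """Map each column linearly onto [0, 1]."""
    return (data - lo) / (hi - lo)

def minmax_invert(scaled, lo, hi):
    """Convert normalized values back to the original scale."""
    return scaled * (hi - lo) + lo

rng = np.random.default_rng(0)
# Placeholder targets inside the allowable region quoted in the text
targets = np.column_stack([rng.uniform(-0.328, 0.44, 480),
                           rng.uniform(0.03, 0.5, 480)])

lo, hi = minmax_fit(targets)
scaled = minmax_apply(targets, lo, hi)

perm = rng.permutation(480)              # random split is an assumption
test_idx, train_idx = perm[:18], perm[18:]

restored = minmax_invert(scaled, lo, hi)
```

The same `lo` and `hi` must be reused when converting network outputs back, otherwise the recovered log(rm) and log(σ) would be on a different scale from the training data.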

Figure 3 shows the performance of the neural network in terms of absolute percentage error for log(rm) and log(σ). It is clear from the figure that an increasing number of iterations tends to cause the outputs to converge to the real value and hence lowers the absolute percentage of errors. Except when the desired outputs log(rm) and log(σ) are small, the neural network yields good results for most of the testing data, with an absolute percentage of error of less than 10%.
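The error measure can be written out as a short function. This is a sketch assuming the usual definition, 100 × |actual − desired| / |desired|; the function name and the sample numbers are hypothetical.

```python
import numpy as np

def absolute_percentage_error(desired, actual):
    """Absolute percentage error of each output relative to its desired value."""
    desired = np.asarray(desired, dtype=float)
    actual = np.asarray(actual, dtype=float)
    return 100.0 * np.abs(actual - desired) / np.abs(desired)

# The same absolute error of 0.01 on a larger and a smaller desired value
errors = absolute_percentage_error([0.40, 0.05], [0.41, 0.06])
```

With this definition the same absolute error of 0.01 gives about 2.5% for a desired value of 0.40 but about 20% for a desired value of 0.05, which may contribute to the larger percentage errors seen when the desired outputs are small.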

In summary, we have presented an inverse technique of finding particle-size distribution by using optical sensing and a neural network. The size-distribution function is assumed to be a log-normal function, so that it is characterized by a mean radius rm and a standard deviation σ. It was shown that the neural network yields good results for the testing data, with an absolute percentage of errors of less than 10% for most of the testing input β(λi). A major advantage of this technique is that, once the neural network is trained, the inverse problem of obtaining the size distributions can be solved speedily and efficiently (in a small fraction of a second) by a microcomputer workstation.

This research is partially supported by a grant-in-aid for scientific research from the Ministry of Education, Science, and Culture of Japan (Monbusho), the National Science Foundation, and NASA.

Figures

Fig. 3 Performance of the neural network in generating (a) the standard deviation of particle size, log(σ), and (b) the mean particle size, log(rm), from the given backscattered intensities, β(λi), in terms of absolute percentage error. Increasing the number of iterations causes the outputs to tend to converge to the true value and hence lowers the absolute percentage error.

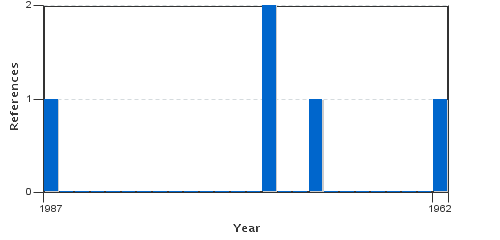

References

1. D. L. Phillips, J. Assoc. Comput. Mach. 9, 84 (1962).

2. P. Edenhofer, J. N. Franklin, and C. H. Papas, IEEE Trans. Antennas Propag. AP-21, (1973).

3. G. Backus and F. Gilbert, Phil. Trans. R. Soc. London Ser. A 266, (1970).

4. E. R. Westwater and A. Cohen, Appl. Opt. 12, 1340 (1973).

5. A. Ishimaru, Wave Propagation and Scattering in Random Media (Academic, New York, 1978), Vol. 2.

6. S. Kitamura and P. Qing, “Neural network application to solve Fredholm integral equations of the first kind,” presented at the International Joint Conference on Neural Networks, Washington D.C., June 1989.

7. M. El-Sharkawi, R. Marks II, M. E. Aggoune, D. C. Park, M. J. Damborg, and L. Atlas, “Dynamic security assessment of power systems using back error propagation artificial neural networks,” presented at the 2nd Annual Symposium on Expert System Application to Power Systems, Seattle, Wash., July, 1989.

8. L. Atlas, R. Cole, Y. Muthusamy, A. Lippman, G. Connor, D. Park, M. El-Sharkawi, and R. Marks II, “A performance comparison of trained multilayer perceptrons and trained classification trees,” Proc. IEEE (to be published).

9. D. Park, M. El-Sharkawi, R. Marks II, L. Atlas, and M. Damborg, “Electric load forecasting using artificial neural networks,” IEEE Trans. Power Syst. (to be published).

10. D. E. Rumelhart and J. L. McClelland, eds., Parallel Distributed Processing (MIT Press, Cambridge, Mass., 1986).

11. R. P. Lippmann, IEEE Trans. Acoust. Speech Signal Process. ASSP-4, 4 (1987).