Overview

Single-layer networks (figure 3.1) have just one layer of active units. Inputs connect directly to the outputs through a single layer of weights. The outputs do not interact, so a network with $N_{out}$ outputs can be treated as $N_{out}$ separate single-output networks. Each unit (figure 3.2) produces its output by forming a weighted linear combination of its inputs, which it then passes through a saturating nonlinear function:

$$u = \sum_j w_j x_j \tag{3.1}$$

$$y = f(u) \tag{3.2}$$

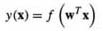
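As a rough illustration of the weighted sum followed by a nonlinearity, and of why the outputs can be treated separately, here is a minimal Python sketch. The function names `unit_output` and `layer_output`, the choice of tanh as the nonlinearity, and the numeric values are assumptions made for this example only; they do not come from the text.

```python
import math

def unit_output(w, x, f=math.tanh):
    """Output of one unit: weighted linear combination of the inputs, then f."""
    u = sum(wj * xj for wj, xj in zip(w, x))  # weighted sum of the inputs
    return f(u)                               # saturating nonlinearity

def layer_output(W, x, f=math.tanh):
    """Outputs of a single-layer network with one weight row per output.

    Each row of W is used on its own, so the outputs are computed exactly
    as separate single-output networks would compute them.
    """
    return [unit_output(w, x, f) for w in W]

# Hypothetical example values, chosen only for illustration.
x = [1.0, -2.0, 0.5]
W = [[0.2, 0.1, 0.0],    # weights feeding output 0
     [-0.4, 0.3, 1.0]]   # weights feeding output 1

y = layer_output(W, x)
print(y)
```

Because no output depends on another, changing one row of `W` changes only the corresponding entry of `y`.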

This can be expressed more compactly in vector notation as

$$y = f(\mathbf{w}^T \mathbf{x}) \tag{3.3}$$

where $\mathbf{x}$ and $\mathbf{w}$ are column vectors with elements $x_j$ and $w_j$, and the superscript $T$ denotes the vector transpose. In general, $f$ is chosen to be a bounded monotonic function. Common choices include the sigmoid function $f(u) = 1/(1 + e^{-u})$ and the tanh function. When $f$ is a discontinuous step function, the nodes are often called linear threshold units (LTUs). Appendix D mentions other possibilities.
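The node functions named above can be sketched in a few lines of Python. This is only an illustration: the 0/1 output levels of the step function, its threshold of zero, and its value exactly at the threshold are assumptions, since the text does not fix them, and the branch in `sigmoid` is a numerical-stability detail added here rather than part of the definition.

```python
import math

def sigmoid(u):
    """Logistic sigmoid f(u) = 1 / (1 + exp(-u)); bounded between 0 and 1."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)  # equivalent form that avoids overflow for very negative u
    return e / (1.0 + e)

def tanh(u):
    """Hyperbolic tangent; bounded between -1 and 1."""
    return math.tanh(u)

def step(u, threshold=0.0):
    """Discontinuous step; a unit using it acts as a linear threshold unit.

    The 0/1 levels and the value at u == threshold are assumed conventions.
    """
    return 1.0 if u >= threshold else 0.0

# Evaluate each function at a few points to see the bounded, monotonic shape.
for u in (-5.0, 0.0, 5.0):
    print(u, sigmoid(u), tanh(u), step(u))
```

All three are monotonic and bounded; only the step function is discontinuous.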